At Brainjar, we are real Apple fans. Most of us use a MacBook Pro, and when Apple released the new MacBooks with upgraded hardware (M2 Pro and M2 Max chips), we couldn’t wait to order them and test them out. Of course, as we are all AI engineers and data scientists, we couldn’t let the opportunity pass by to test the M2 chips and benchmark them.

The M2 Pro and Max

Apple wouldn’t be Apple if they didn’t use the best marketing slogans to describe their new releases. The M2 Pro and M2 Max are called the “next-generation chips for next-level workflows”, but do they do the trick? Will they bring the performance to new heights? We’ll test this later, but first, let’s look at some specs. The M2 Pro has up to 12 CPU cores and 19 GPU cores, while the M2 Max has 12 CPU cores and up to 38 GPU cores. Both chips offer more cores than their respective M1 versions.

Importantly, and unlike most PCs, Apple has used a Unified Memory Architecture since the release of the M1. Unified memory means that the RAM sits in the same package as the system-on-a-chip and is shared by the CPU and GPU. This reduces the distance data has to travel to reach the CPU or GPU, resulting in higher memory bandwidth for both.

Of course, Apple also has their Apple Neural Engine (ANE), a specialized neural processing unit faster and more energy efficient than the CPU or GPU. However, for this blog, we did not benchmark the ANE as it only works with Core ML; this is Apple’s framework for developers to build, train, and run their ML models on device. We use TensorFlow or PyTorch for our work, so we decided not to include this in our benchmark.

Benchmarking

So, our aim is clear: we want to find out whether Apple’s M2 chips are adequate for data scientists’ day-to-day tasks, such as model training. As a benchmark, we chose to train a ResNet50. It is a relatively old computer vision model, but with its maturity comes an advantage: it has become a “gold standard” that delivers good results and swift performance. Moreover, most hardware has been optimized to work well with this type of model.

⚠️ Disclaimer: You can run this benchmark with other models, which may have different requirements, so our comparison will not necessarily carry over to them. However, ResNet50 is a good benchmark, as it gets the most out of the hardware. You can always check out other benchmarks here.

We used this command to run our benchmark:

python tf2-benchmarks.py --model resnet50 --xla --batch_size 64 --num_gpus 1 --train_steps 100 --train_epochs 1 --skip_eval true

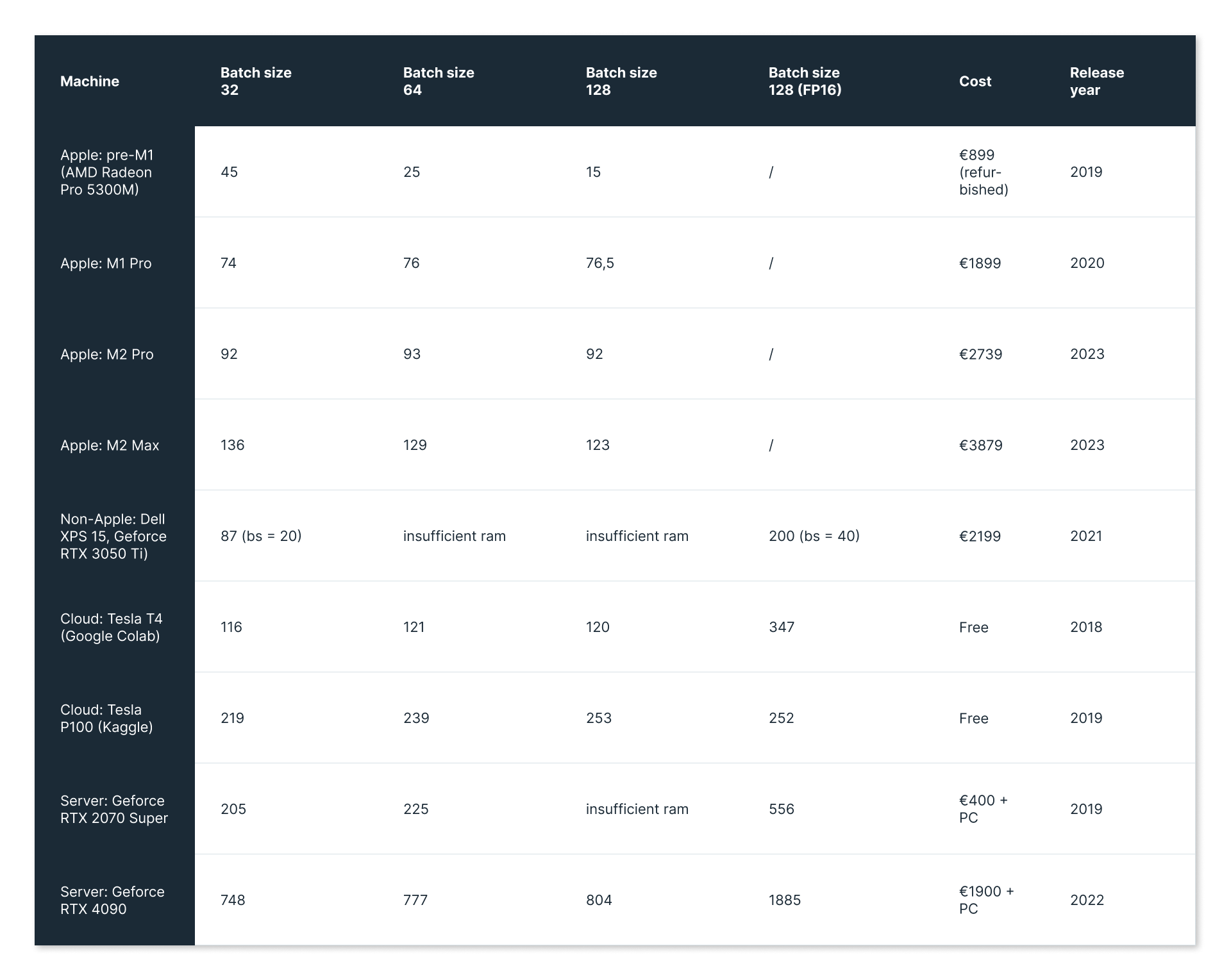

In this benchmark, we measure throughput as the number of images processed per second (rounded to the nearest integer). As a general rule of thumb, the more images you process per step (the batch size), the more memory you need, so we ran our benchmark with different batch sizes (32, 64, 128, and 128 with FP16).
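The throughput metric can be sketched in a few lines of Python. This is only an illustration of how images per second relates to batch size, step count, and elapsed time, not the code of the benchmark script itself. The names `images_per_second`, `measure_throughput`, `train_step`, and `warmup_steps` are hypothetical, and the warm-up phase is our own assumption about how to keep one-off start-up costs out of the measurement.

```python
import time


def images_per_second(batch_size, steps, elapsed_seconds):
    """Throughput = total images processed / wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return batch_size * steps / elapsed_seconds


def measure_throughput(train_step, batch_size, steps, warmup_steps=5):
    """Time `steps` calls of a (hypothetical) train_step callable.

    A few warm-up calls run first so that one-off costs such as
    compilation or caching do not distort the measurement.
    """
    for _ in range(warmup_steps):
        train_step()

    start = time.perf_counter()
    for _ in range(steps):
        train_step()
    elapsed = time.perf_counter() - start

    # Round to the nearest integer, as in the results reported here.
    return round(images_per_second(batch_size, steps, elapsed))
```

For example, 100 steps at a batch size of 64 that take 32 seconds in total correspond to 6,400 images in 32 seconds, or 200 images per second.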

Results: Time to buy an M2?

Time to discuss the results of our benchmark!

We have divided our ‘machines’ into four groups to compare our results. First, we have the Apple MacBooks (the first four rows). Second, we have another laptop, a Dell XPS 15. Third, we have our cloud solutions. Last but definitely not least, we have our servers. Now, let’s look at the most significant differences!

Apple MacBooks

When looking at the MacBooks’ performance, we can clearly see an improvement with each generation. The MacBooks also outperform the Dell laptop, which has insufficient RAM for most of the batch sizes. However, even the most expensive MacBook (M2 Max) cannot compete with our servers. Compared with the free alternatives in the cloud, only the M2 Max performs about the same as Google Colab, while Kaggle outperforms every MacBook.

Dell XPS 15

As we have already said, the M2 chips perform better than the Dell laptop. On the Dell, we even had to reduce the batch size to 20, as it could not handle 32 due to insufficient RAM. Apple wins this round thanks to its unified memory, which lets the GPU draw on the system RAM and allows the MacBooks to handle the larger batch sizes.
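Lowering the batch size until training fits in memory is something we did by hand, but the idea can be sketched as a simple fallback loop. This is a minimal illustration under stated assumptions: `try_run` is a hypothetical callable that trains briefly at a given batch size and raises `MemoryError` when the batch does not fit, and `fake_run` is a stand-in with a made-up memory limit. Real frameworks raise their own error types (TensorFlow, for instance, raises `tf.errors.ResourceExhaustedError`), so the `except` clause would need adapting.

```python
def largest_fitting_batch_size(try_run, candidates):
    """Return the largest candidate batch size for which try_run succeeds.

    Returns None if every candidate runs out of memory.
    """
    for batch_size in sorted(candidates, reverse=True):
        try:
            try_run(batch_size)
            return batch_size
        except MemoryError:
            # This batch size does not fit; fall back to the next smaller one.
            continue
    return None


def fake_run(batch_size, limit=24):
    """Hypothetical stand-in for a short training run.

    Pretends the device can hold at most `limit` images per batch.
    """
    if batch_size > limit:
        raise MemoryError(f"batch size {batch_size} does not fit")


chosen = largest_fitting_batch_size(fake_run, [128, 64, 32, 20])
print(chosen)
```

With the made-up limit of 24 images, the candidates 128, 64, and 32 all fail and the loop settles on 20.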

Cloud

We also ran our benchmark in the cloud, as we often use these solutions to train our machine learning models. More specifically, we tested on Google Colab and Kaggle. We use these tools because they offer free GPUs, with the added advantage of using less power locally and not exhausting your own devices. Of course, using the cloud isn’t always possible, especially when working with sensitive data (in this case, we would recommend your own device or a server). When it comes to the benchmark, the two cloud solutions do not perform equally. Google Colab performs as well as the M2 Max, whereas Kaggle is twice as fast.

Servers

Simply put: nothing compares to the GeForce RTX 4090. It is almost five times as fast as the new M2 Max. As long as you buy the latest hardware, your own server will beat any of the laptops or cloud tools we tested.

Time to implement FP16, Apple?

We also benchmarked every non-Apple machine with mixed precision training, as this is something Apple is still lacking. All our other benchmarks were run in single-precision floating-point format (FP32), but for neural networks, FP16 is sometimes enough. In these cases, mixed precision training is the way to go: it identifies the steps that require full precision and uses FP32 only for those steps, while using FP16 everywhere else. This has several benefits: training requires less memory and less bandwidth, and it runs much faster. In our benchmark, the FP16 results are clearly better. Apple hasn’t implemented this so far, but we don’t see a reason for them not to. Maybe next release?
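To make the memory argument concrete, here is a small sketch using only Python’s standard library. It compares the storage needed for one batch of input images in FP32 and FP16, and shows why some steps still need full precision. The input shape of 224×224×3 is our assumption (the usual ImageNet size for ResNet50), and the helper names `tensor_bytes` and `roundtrip` are purely illustrative. This is not how a framework implements mixed precision; it only illustrates the trade-off.

```python
import struct

# Size in bytes of one value in each format ("e" is IEEE 754 half precision).
BYTES_FP32 = struct.calcsize("<f")
BYTES_FP16 = struct.calcsize("<e")


def tensor_bytes(shape, bytes_per_value):
    """Memory needed to store a dense tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count * bytes_per_value


def roundtrip(value, fmt):
    """Store a Python float in the given format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


# Assumed input batch: 128 RGB images of 224x224 pixels.
batch_shape = (128, 224, 224, 3)
fp32_mib = tensor_bytes(batch_shape, BYTES_FP32) / 2**20
fp16_mib = tensor_bytes(batch_shape, BYTES_FP16) / 2**20
print(f"FP32: {fp32_mib} MiB, FP16: {fp16_mib} MiB")

# The price of FP16: less precision and a much smaller range.
rounding_error = abs(roundtrip(0.1, "<e") - 0.1)
tiny_gradient_fp16 = roundtrip(1e-8, "<e")  # underflows to zero in FP16
tiny_gradient_fp32 = roundtrip(1e-8, "<f")  # survives in FP32
print(rounding_error, tiny_gradient_fp16, tiny_gradient_fp32)
```

The batch takes half the memory in FP16, but a very small value such as 1e-8 is flushed to zero. That is the kind of step for which mixed precision training keeps FP32.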

To upgrade or not to upgrade?

Our honest advice? The M2 is definitely good for developing and testing models locally, and it clearly beats the non-Apple laptop we tested. However, if you want to do extensive training, it’s best to switch to the cloud or your own servers. Of the two, we would recommend a server, as offloading the heavy work will also make your laptop last way longer.

And when comparing the M2 Pro and M2 Max, especially keeping the price tag in mind? We believe the performance difference is too small to justify the big price difference. We would rather invest this money in a server, but everything depends on your needs.

In this benchmark, we only tested training a model, and we realize a data scientist does much more. When it comes to other tasks a data scientist typically executes — analysis, pre-processing, and so on — these new laptops are definitely top-notch, so there is always room to negotiate with your boss. 😉